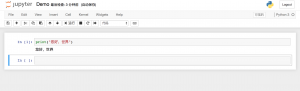

最近老是碰上MySql报错:1203:User already has more than ‘max_user_connections’ active,之前都没出现过,感觉应该是慢查询导致的。向运维拷贝慢日志分析,慢日志开、启配置参考这里。

拷贝出来的日志很大,需要按故障时间点做一下切割,以便缩小排查范围。按照这里提供的cutlogbytime.pl脚本运行却报错

[vagrant@centos64 mysql-log-filter-1.9]$ ./cutlogbytime.pl slow.log 1443103200 1443117600 > yestoday.log

: command not foundline 1:

: command not foundline 4:

./cutlogbytime.pl: line 5: use: command not found

: command not foundline 5:

./cutlogbytime.pl: line 6: use: command not found

: command not foundline 6:

: command not foundline 7:

'/cutlogbytime.pl: line 8: syntax error near unexpected token `{

'/cutlogbytime.pl: line 8: `if (@ARGV<2){

去掉顶行的空格后再运行,还是报错

[vagrant@centos64 mysql-log-filter-1.9]$ ./cutlogbytime.pl slow.log 1443103200 1443117600 > today.log

-bash: ./cutlogbytime.pl: /usr/bin/perl^M: bad interpreter: No such file or directory

最后参考stackoverflow上面的答案更改运行方式为Perl(而不是shell),就可以了。

[vagrant@centos64 mysql-log-filter-1.9]$ perl cutlogbytime.pl slow.log 1443103200 1443117600 > today.log

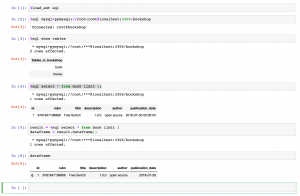

利用mysqlslowdump(perl脚本)来分析日志,-s参数表示排序方式:r表示影响行数(Rows),t表示耗时(Time),c表示查询次数(Count)

[vagrant@entos64 mysql-log-filter-1.9]$ perl mysqldumpslow.pl -s r -t 10 today4.log

Reading mysql slow query log from today4.log

Count: 1 Time=190.48s (190s) Lock=0.00s (0s) Rows=21829854.0 (21829854), xx[xxxx]@[192.168.10.139]

SELECT /*!N SQL_NO_CACHE */ * FROM `errormessage`

Count: 32791 Time=40.95s (1342865s) Lock=0.05s (1512s) Rows=1.0 (32791), xx[xxxx]@10hosts

select * from connectinfo where ID=N and AppType=N ORDER BY CreateDatetime DESC LIMIT N

Count: 3 Time=3.71s (11s) Lock=0.02s (0s) Rows=300.0 (900), xx[xxxx]@2hosts

select SeverName from errormessage where ID='S' and ServerType=N and level=N and MsgType <= N

第一个语句返回行数21829854,查看具体慢日志,之后需要插入这张表的进程均处于等待状态。

# Time: 150924 1:03:12

# User@Host: xx[xxxx] @ [192.168.10.139] Id: 1493761

# Query_time: 190.479062 Lock_time: 0.000000 Rows_sent: 21829854 Rows_examined: 21829854

SET timestamp=1443027792;

SELECT /*!40001 SQL_NO_CACHE */ * FROM `errormessage`;

# Time: 150924 1:03:14

# User@Host: xx[xxxx] @ [192.168.10.168] Id: 1498010

# Query_time: 59.669817 Lock_time: 57.159403 Rows_sent: 0 Rows_examined: 0

SET timestamp=1443027794;

insert into errormessage (`ID`,`ServerType`,`MsgType`,`Level`,`dev`,`content`,`EventTime`,`SeverName`) values ( '1217', '3', '4', '4', '827', 'erc:start erc error,songid=46243,keymd5=ee1275b26762e85a7f00e9890bdc092e,ercmd5=abbc3ea9102dbd003b7aa0547dcbf6fa', '2015-09-23 21:49:27', '192.168.15.117');

# User@Host: xx[xxxx] @ [192.168.10.205] Id: 1494756

# Query_time: 157.211158 Lock_time: 154.673647 Rows_sent: 0 Rows_examined: 0

SET timestamp=1443027794;

insert into errormessage (`ID`,`ServerType`,`MsgType`,`Level`,`dev`,`content`,`EventTime`,`SeverName`) values ( '865', '3', '1', '2', '106', '检测正常!', '2015-09-24 01:01:18', '192.168.0.33');

# User@Host: xx[xxxx] @ [192.168.10.213] Id: 1496479

# Query_time: 100.733230 Lock_time: 98.210902 Rows_sent: 0 Rows_examined: 0

SET timestamp=1443027794;

insert into errormessage (`ID`,`ServerType`,`MsgType`,`Level`,`dev`,`content`,`EventTime`,`SeverName`) values ( '2472', '3', '2', '4', '809', 'videoseripnoconfig', '2015-09-24 01:02:26', '192.168.0.18');

分析这几天的日志,发现故障时间点附近都是这个语句引起后面的SQL堵塞。原来是每天早上1点开始备份并同步全表数据,锁住了这个表导致后面的所有这个表的insert操作处于等待状态。mysqldump应该使用–single-transaction来避免锁表,类似下面这个

mysqldump –uuser -p --skip-opt -q -R --single-transaction --default-character-set=utf8 --master-data=2 --create-option --no-autocommit –S ${sock} -B ${DBName} > backup.sql

但是这样仍然是全表扫描进行备份,如果能够增量备份的话,影响要小很多,或者数据做冷热/新旧之分,定期将新(每天)/热数据转入旧(历史)/冷数据中。后面运维的解决方案是:升级数据库机器从虚拟机变为实体机,配置从机,并从从机进行备份同步。

上面mysqlslowdump使用影响行数来排序,事实上用另外两个类型(时间,次数)分析结果是connectinfo比较频繁,一直以来都认为是这个表的操作引起的。这里还尝试了其他工具来分析,使用mysqlsla.pl进行分析,相关参数选项参考这里。

[vagrant@centos64 mysql-log-filter-1.9]$ perl mysqlsla.pl today.log

Auto-detected logs as slow logs

Report for slow logs: today4.log

60.57k queries total, 17 unique

Sorted by 't_sum'

Grand Totals: Time 5.38M s, Lock 3.22M s, Rows sent 21.86M, Rows Examined 184.46M

______________________________________________________________________ 001 ___

Count : 25.59k (42.24%)

Time : 3905525.574451 s total, 152.643069 s avg, 113.07488 s to 2720.338946 s max (72.64%)

95% of Time : 3260112.482495 s total, 134.12789 s avg, 113.07488 s to 282.366041 s max

Lock Time (s) : 3168076.975558 s total, 123.820721 s avg, 108.548105 s to 311.639359 s max (98.45%)

95% of Lock : 2961933.212121 s total, 121.860167 s avg, 108.548105 s to 123.487106 s max

Rows sent : 0 avg, 0 to 0 max (0.00%)

Rows examined : 54 avg, 0 to 4.92k max (0.75%)

Database :

Users :

xx@ 192.168.10.147 : 10.65% (2724) of query, 10.26% (6215) of all users

xx@ 192.168.10.209 : 10.33% (2643) of query, 10.16% (6156) of all users

xx@ 192.168.10.205 : 10.16% (2599) of query, 9.97% (6036) of all users

xx@ 192.168.10.211 : 10.13% (2591) of query, 9.98% (6042) of all users

xx@ 192.168.10.207 : 9.93% (2541) of query, 9.95% (6024) of all users

xx@ 192.168.10.161 : 9.83% (2515) of query, 9.84% (5960) of all users

xx@ 192.168.10.149 : 9.81% (2510) of query, 9.95% (6028) of all users

xx@ 192.168.10.215 : 9.76% (2498) of query, 9.85% (5963) of all users

xx@ 192.168.10.168 : 9.71% (2485) of query, 9.69% (5868) of all users

xx@ 192.168.10.213 : 9.69% (2480) of query, 9.66% (5851) of all users

Query abstract:

SET timestamp=N; UPDATE connectinfo SET devicetag='S', connectipaddress='S', updatedatetime=now() WHERE ID=N AND apptype=N;

Query sample:

SET timestamp=1443027797;

update connectinfo set DeviceTag='1070A416AF000000', ConnectIPAddress='60.174.116.165', UpdateDatetime=now() where ID=5358 and AppType=0;

______________________________________________________________________ 002 ___

Count : 32.79k (54.14%)

Time : 1344378.871914 s total, 40.99841 s avg, 2.000747 s to 1944.548192 s max (25.01%)

95% of Time : 587407.556704 s total, 18.85678 s avg, 2.000747 s to 233.465042 s max

Lock Time (s) : 1512.917798 s total, 46.138 ms avg, 76 ▒s to 114.302 ms max (0.05%)

95% of Lock : 1414.978902 s total, 45.423 ms avg, 76 ▒s to 50.514 ms max

Rows sent : 1 avg, 1 to 1 max (0.15%)

Rows examined : 4.92k avg, 4.92k to 4.92k max (87.41%)

Database :

Users :

xx@ 192.168.10.209 : 10.24% (3359) of query, 10.16% (6156) of all users

xx@ 192.168.10.149 : 10.16% (3331) of query, 9.95% (6028) of all users

xx@ 192.168.10.147 : 10.11% (3315) of query, 10.26% (6215) of all users

xx@ 192.168.10.211 : 10.03% (3288) of query, 9.98% (6042) of all users

xx@ 192.168.10.207 : 10.02% (3285) of query, 9.95% (6024) of all users

xx@ 192.168.10.161 : 9.97% (3268) of query, 9.84% (5960) of all users

xx@ 192.168.10.215 : 9.96% (3266) of query, 9.85% (5963) of all users

xx@ 192.168.10.205 : 9.92% (3254) of query, 9.97% (6036) of all users

xx@ 192.168.10.168 : 9.86% (3234) of query, 9.69% (5868) of all users

xx@ 192.168.10.213 : 9.73% (3191) of query, 9.66% (5851) of all users

Query abstract:

SET timestamp=N; SELECT * FROM connectinfo WHERE ID=N AND apptype=N ORDER BY createdatetime DESC LIMIT N;

Query sample:

SET timestamp=1443027795;

select * from connectinfo where ID=7646 and AppType=0 ORDER BY CreateDatetime DESC LIMIT 1;

______________________________________________________________________ 003 ___

Count : 842 (1.39%)

Time : 66663.314786 s total, 79.172583 s avg, 2.011408 s to 673.604537 s max (1.24%)

95% of Time : 56684.989954 s total, 70.944919 s avg, 2.011408 s to 193.623235 s max

Lock Time (s) : 48221.988255 s total, 57.27077 s avg, 69 ▒s to 185.402303 s max (1.50%)

95% of Lock : 40627.196184 s total, 50.847555 s avg, 69 ▒s to 166.67704 s max

Rows sent : 0 avg, 0 to 0 max (0.00%)

Rows examined : 0 avg, 0 to 0 max (0.00%)

Database :

Users :

xx@ 192.168.10.207 : 11.64% (98) of query, 9.95% (6024) of all users

xx@ 192.168.10.205 : 11.28% (95) of query, 9.97% (6036) of all users

xx@ 192.168.10.213 : 10.93% (92) of query, 9.66% (5851) of all users

xx@ 192.168.10.161 : 10.45% (88) of query, 9.84% (5960) of all users

xx@ 192.168.10.149 : 10.33% (87) of query, 9.95% (6028) of all users

xx@ 192.168.10.211 : 9.74% (82) of query, 9.98% (6042) of all users

xx@ 192.168.10.147 : 9.38% (79) of query, 10.26% (6215) of all users

xx@ 192.168.10.215 : 9.38% (79) of query, 9.85% (5963) of all users

xx@ 192.168.10.168 : 9.03% (76) of query, 9.69% (5868) of all users

xx@ 192.168.10.209 : 7.84% (66) of query, 10.16% (6156) of all users

Query abstract:

SET timestamp=N; INSERT INTO errormessage (id,servertype,msgtype,level,dev,content,eventtime,severname) VALUES ( 'S', 'S', 'S', 'S', 'S', 'S', 'S', 'S')1;

使用mysqlsla可以看SQL语句的执行数量/比例,影响行数,用户,占比等。,从这里看很可能认为是connectinfo表(95%以上)引起,SHOW PROCESSLIST也是如此 。

由于这个文件我是单独下载回来的,运行mysqlsla.pl时候碰到很多错误,逐个安装解决了

[vagrant@centos64 mysql-log-filter-1.9]$ perl mysqlsla.pl today.log

Can't locate Time/HiRes.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at mysqlsla.pl line 2092.

BEGIN failed--compilation aborted at mysqlsla.pl line 2092.

#解决方法

[vagrant@centos64 mysql-log-filter-1.9]$ sudo yum install perl-Time-HiRes

[vagrant@centos64 mysql-log-filter-1.9]$ perl -MCPAN -e 'install DBI'

Can't locate CPAN.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .).

BEGIN failed--compilation aborted.

#解决方法

[vagrant@centos64 mysql-log-filter-1.9]$ sudo yum install perl-DBI

正确的方法是应该检测对应根目录下面的Makefile.PL,比如

[vagrant@centos64 percona-toolkit-2.2.15]$ perl Makefile.PL

#如果报以下错误,需要先安装对应模块,简单点就是

#sudo yum install perl-devel

Can't locate ExtUtils/MakeMaker.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at Makefile.PL line 1.

BEGIN failed--compilation aborted at Makefile.PL line 1.

然后安装对应模块,参考这里。

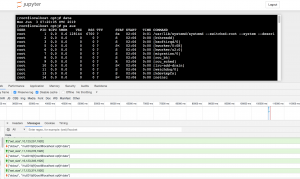

使用Percona公司的工具pt-query-digest来分析也得到了同mysqlsla类似的结果

[vagrant@vagrant-centos64 bin]$ pt-query-digest ../../today4.log

# 9.8s user time, 700ms system time, 21.05M rss, 73.66M vsz

# Current date: Mon Oct 5 05:52:01 2015

# Hostname: vagrant-centos64.vagrantup.com

# Files: ../../today.log

# Overall: 60.57k total, 17 unique, 4.54 QPS, 402.68x concurrency ________

# Time range: 2015-09-23 22:17:29 to 2015-09-24 02:00:00

# Attribute total min max avg 95% stddev median

# ============ ======= ======= ======= ======= ======= ======= =======

# Exec time 5376198s 2s 2720s 89s 258s 118s 57s

# Lock time 3217840s 0 312s 53s 118s 60s 48ms

# Rows sent 20.85M 0 20.82M 361.00 0.99 84.46k 0.99

# Rows examine 175.91M 0 20.82M 2.97k 4.71k 84.48k 4.71k

# Query size 7.85M 64 597 135.90 151.03 27.56 112.70

# Profile

# Rank Query ID Response time Calls R/Call V/M Item

# ==== ================== ================== ===== ======== ===== ========

# 1 0xF1132168DB0BFC57 3905525.5745 72.6% 25586 152.6431 61.61 UPDATE connectinfo

# 2 0xD4B317E755A0ABD7 1344378.8719 25.0% 32791 40.9984 30... SELECT connectinfo

# 3 0xE23849EE6FB19DAE 66663.3148 1.2% 842 79.1726 62.99 INSERT errormessage

...

# Query 1: 7.52 QPS, 1.15kx concurrency, ID 0xF1132168DB0BFC57 at byte 16243195

# This item is included in the report because it matches --limit.

# Scores: V/M = 61.61

# Time range: 2015-09-24 01:03:17 to 02:00:00

# Attribute pct total min max avg 95% stddev median

# ============ === ======= ======= ======= ======= ======= ======= =======

# Count 42 25586

# Exec time 72 3905526s 113s 2720s 153s 271s 97s 124s

# Lock time 98 3168077s 109s 312s 124s 118s 14s 118s

# Rows sent 0 0 0 0 0 0 0 0

# Rows examine 0 1.33M 0 4.80k 54.39 0 504.65 0

# Query size 48 3.78M 149 157 154.94 151.03 0.52 151.03

# String:

# Hosts 192.168.10.147 (2724/10%)... 9 more

# Users gate

# Query_time distribution

# 1us

# 10us

# 100us

# 1ms

# 10ms

# 100ms

# 1s

# 10s+ ################################################################

# Tables

# SHOW TABLE STATUS LIKE 'connectinfo'\G

# SHOW CREATE TABLE `connectinfo`\G

update connectinfo set DeviceTag='10705BDDCD000000', ConnectIPAddress='115.231.63.78', UpdateDatetime=now() where ID=6912 and AppType=0\G

# Converted for EXPLAIN

# EXPLAIN /*!50100 PARTITIONS*/

select DeviceTag='10705BDDCD000000', ConnectIPAddress='115.231.63.78', UpdateDatetime=now() from connectinfo where ID=6912 and AppType=0\G

PS:这个问题解决后没几天,数据库又出问题,以为又是慢SQL什么的,结果是交换机网口问题。。。数据传输太慢,导致主从不同步。。。

参考链接:

mysql 慢日志分析

慢日志按时间截取

MySQL 之 slow log

MySQL优化—工欲善其事,必先利其器(2)

日志常用统计技巧

性能优化之MySQL优化(一)

mysqlsla的安装与使用

安装DBI组件。 Can’t locate DBI.pm in @INC-mysql接口

Issue 12: Can’t locate Time/HiRes.pm

打开MySQL的慢查询记录

“Can’t locate ExtUtils/MakeMaker.pm” while compile git

analysing slow MySQL queries with pt-query-digest

mysqldump备份原理

mysqldump –single-transaction, yet update queries are waiting for the backup

mysql 利用binlog增量备份,还原实例

MySQl备份恢复策略(完全+增量备份策略)